While AI has gotten very good at things like talking and listening, it has yet to come close to human levels of intelligence. Jamie Peterson argues that the growing hype around AI is giving the public unrealistic expectations about the industry’s progress, cultivating an environment of suspicion and constant disappointment.

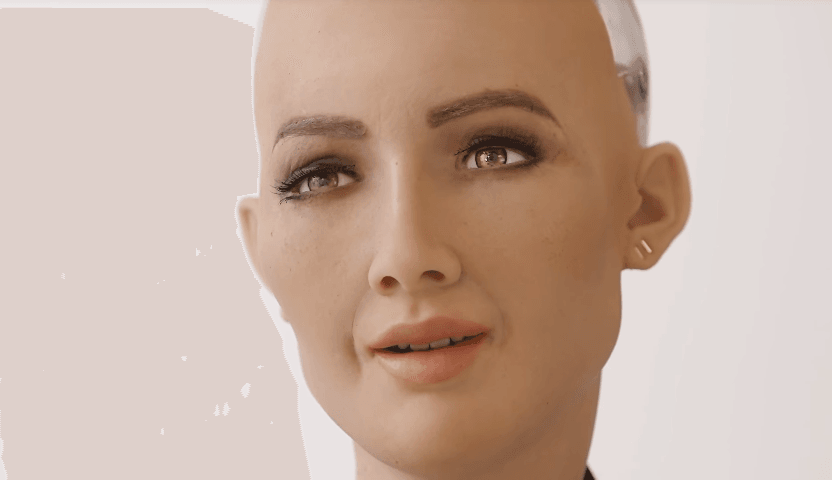

Saudi Arabia recently declared a robot to be a citizen, which shows just how deep the misunderstanding of where we’re at with AI really is.

Real talk: we don’t have an AI that comes close to human levels of intelligence. Personal assistants like Siri, Alexa, Cortana and Google Assistant all try to give the illusion of intelligence, with jokes and all the knowledge of the internet on tap. But there’s a lot of smoke and mirrors going on. The jokes are pre-written by humans, and past the speech interface, each assistant is pretty much a dressed-up internet browser.

As the AI hype shoots up into the stratosphere, it gives the public an increasingly unrealistic view of the research’s true applications and progress. It’s very similar to the public’s reaction to stem cell research a few years ago: a vigorous feedback cycle of media outlets publishing increasingly sensationalist stories to capitalise on snowballing public interest. We were told stem cells could cure cancer, make the paralysed walk again, and even stop or reverse ageing. As the claims drift further and further from reality, they start to undermine the credibility of the entire industry. Selling AI or stem cells like snake oil cultivates an environment of suspicion and constant disappointment.

What is real is that AI is getting extremely good at a few tasks that were previously thought to be very hard for computers. Describing images, listening to speech, talking to humans: AI already does these things better than most humans. For more general decision-making, AI is very good at processing huge datasets quickly, which makes it useful for finding simple patterns and making decisions faster than any human could react.

Part of the problem is that different tasks call for very different approaches. Convolutional Neural Networks have been very powerful in computer vision, while Recurrent Neural Networks show up a lot in audio processing. There’s also a whole host of approaches for general decision-making, but we’re still a long way from having a black box that you can just throw data at and get an intelligent response.

Looking at it intuitively, think about how long it takes to train a human, let alone how many decades it takes to develop a wise one. Learning is a lifelong process. Even if we’re able to match the hardware capabilities of a human mind (and we’re not there yet, given the sheer complexity of the brain’s neural network), we’re going to need a much faster way to train it if we want to achieve those results this century. It’s a really, really big challenge.

It’s time to put the brakes on the hype train and breathe in that nice fresh air. An article from last week published on The Verge sums it up best:

> The AI Index, as it’s called, was published this week, and begins by telling readers we’re essentially “flying blind” in our estimations of AI’s capacity. It goes on to make two main points: first, that the field of AI is more active than ever before, with minds and money pouring in at an incredible rate; and second, that although AI has overtaken humanity when it comes to performing a few very specific tasks, it’s still extremely limited in terms of general intelligence.

Grand gestures like granting citizenship imply a level of intelligence we’re not even close to achieving in AI. They set up expectations for the public that can’t be met, and that’s ultimately damaging for a new industry with a lot of potential.

Jamie Peterson is a software engineer at tech-creative company Rush Digital, where this article was first published.

The Spinoff’s business content is brought to you by our friends at Kiwibank. Kiwibank backs small to medium businesses, social enterprises and Kiwis who innovate to make good things happen.