Does an AI art generator respond as effectively to a client’s brief as a human illustrator? Tim Gibson took some of his own creative commercial work and went up against the bots.

The bots are coming and they’re after our jobs. We knew they were coming after the truck drivers, check-out people and call-centre staff, but I thought as a creative I would have longer to prepare. Now, Artificial Intelligence (AI) art programs like Dall-E 2 and Midjourney are storming my little ivory-illustrator tower.

Is the risk real? If so, are artists just the first creative foot soldiers to get mown down in the front line? Or are AI Art Generators just a new tool in the creative’s toolset?

Just a few years ago illustration and design were listed alongside nursing and social work as industries least likely to be impacted by AI-related job losses. Maybe they had another 20 years. But illustration’s life expectancy has been shaken up by the invasive disrupter that is AI art generators. In an attempt to gauge the current sophistication of these systems, and get a sense of their competitiveness versus a human counterpart, I’ve run a few totally biased and unscientific tests of my own.

I’m giving the Dall-E 2 AI art generator illustration briefs I’ve already completed, and then we’ll semi-objectively gauge the results vs the real-world artwork.

First, it’s probably helpful to compare and contrast the traditional way of sourcing artwork for your beer label/gig poster/marketing campaign vs this new AI “prompt” system. In both instances, someone at a company decides they need illustration to express their ideas. Then they might gather a few smart broad thinkers, throw some ideas around internally, decide what the artwork needs to achieve, who it should appeal to, some specific content ideas and maybe an idea of art style (impressionist painting vs 1950s comic book etc).

Here the two pathways diverge.

If you’re using a human artist you’d first try and pick the right person with a good artistic alignment for the style and energy needed. After some negotiation around budget and overuse of the term “excitement”, you’d get a contract signed and set them loose. If the illustrator is broad-minded they might “yes, and” the client’s ideas, spin ideas in new (better?) wild card directions or carefully and collaboratively improve the brief before they even pick up their pencils.

Within a day or three the client might get some rough artwork back, and depending on how close to the client’s needs and wants the artwork is, things might go back and forth a few times to narrow down to a final, polished piece of work that reasonably satisfies everyone involved. Good things take time, but the process might take two to four weeks.

However, for a non-human illustrator, the client takes the brief and condenses the core subject matter into short, visually descriptive sentences and feeds that to the AI. The AI, in literal seconds, spits back some visual hot takes. It’s worth noting that while the AIs themselves are often built in a way that restricts them from creating obviously objectionable artworks (some hot-button terms can’t be used in a prompt etc), they also can’t push back on, or redirect, bad ideas – which, let’s be honest, clients do sometimes give.

If the AI’s results are not right the client could either change their written inputs to re-direct the AI or ask for variations on an artwork that’s the closest to what they want, hoping that serendipity will take the work closer to the desired goal. Trying a different AI is also becoming a viable option.

Now, as well as being an illustrator, I’m also a branding guy and an art director who often selects and guides other illustrators for clients, so I’ll try my best to wear that hat (not my scared illustrator beret) as I give the Dall-E 2 AI “an exciting opportunity for exposure” by completing some old briefs of mine.

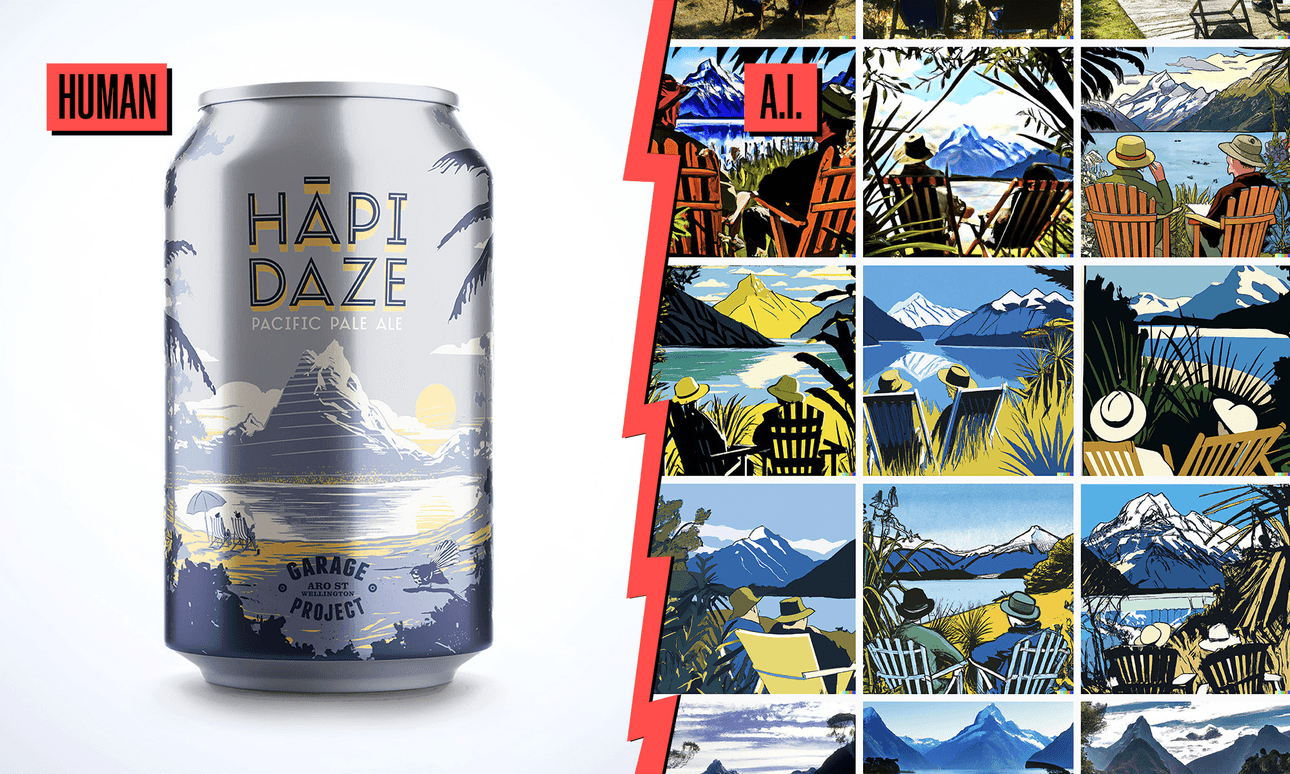

HĀPI DAZE

Original prompt: “A view from the New Zealand bush out to a lake and Mitre Peak, crisp afternoon light, two men sitting by lake in deck chairs, in style of 1930 tourism poster”

AI Result: 4/5 Stars. I had to get more specific with the placement of things and replace “1930’s tourism poster” with colour directions and the term “screen print” to escape the photography style it was pushing. It assumed people on deck chairs 100% wear hats (which is interesting), and it did a great job on NZ-specific-looking bush and the general scene. They do feel a little “school project” to me, but some are heading in interesting directions.

BOSS LEVEL

Original Prompt: “A giant Gundam-style robot, in silhouette with glowing little highlights, standing in a large and dark sci-fi corridor, flashing lasers and lights on walls, roof and floor, Synthwave art style.”

AI Result: 3/5 Stars. I think being able to give very specific art style and content references helped, but I (standing in as art director/client) had no success getting certain notes or elements into the piece. It felt a bit like working with a very talented illustrator who doesn’t read their emails properly. I have no idea where all the glowing crucifixes came from – perhaps from Justice’s synthwave album covers?

TSUYU SAISON

Original Prompt: “A swirl of orange koi fish swimming on a flooded cobbled street, with the reflection of a businessman with an umbrella looking down, traditional Tokyo. Ukiyo-e style Japanese print.”

AI Result: 1/5 Stars. I felt like I was bumping up against a limitation that the AI has that a human doesn’t – the ability to combine multiple ideas and elements into a single illustration. I reworded and edited this prompt many times and it was only when I simplified the requests by removing specific content that the results started looking better. Not really fit for purpose.

CAT’S PYJAMAS

Original Prompt: “Large number of cartoon cats, standing on hind feet like people, some in costumes. Black and white, linework, illustration style like Matt Groening. One cat in middle is wearing pyjamas”

AI Result: 1/5 Stars. Things got more fun when I eventually added: “cats having a fun costume party” to the prompt, but overall it’s either devoid of life or a total horror show and it never found the sweet spot in between.

PERNICIOUS WEED

Original Prompt: “A giant monster made out of hop vines leaning over 3 classic cars, in a hop field, the sky is dark and filled with stars and a full moon, in the style of Tim Gibson.”

AI Result: 3/5 Stars. For shits and giggles I added myself as a style reference, in the same way you might use “Keith Haring” or “Rita Angus”. The Dall-E result suggests the possibility that, despite me wantonly exposing my data to giant tech companies via years of phone, social media and smart home interactions, OpenAI/Dall-E doesn’t know who I am. Which is comforting, humbling and insulting all at once.

Ego aside, it got confused by where the scene/setting ends and the monster begins, but nevertheless, there are some very interesting stylistic looks it has proposed, which would be fun to explore.

BOSSA NOVA T-SHIRT (So exclusive it was never printed)

Original Prompt: “A gleeful toucan sitting on Carmen Miranda’s fruit headdress and throwing pieces of bright fruit everywhere with its wings, making a real mess.”

AI Result: 3/5 Stars. The photo-real images of toucans making a mess are high-grade deepfake level. The AI couldn’t execute on the complexity of all the prompt requirements, and apparently didn’t get the reference to Carmen Miranda, or her fruity head attire. I eventually replaced that phrase with “fruit-hat headdress” for better results. Lots of fun developments though, with some unrequested anthropomorphism thrown into the mix.

The life-size toucans messing with the toucan-headed figures are veering into fever-dream territory, which is a hard no for most commercial illustrations.

So where is AI art at?

Can AI draw? Heck yes and, depending on the AI, it can produce great paintings or photoreal results. Can AI brainstorm? Yes, but like a really disruptive staff member who only listens periodically in meetings and goes on weird tangents when they’re holding the whiteboard markers.

And AI is ludicrously fast at producing work. It might not be what you asked for, and it probably won’t have upgraded your original brief like a good human creative can, but you could burn through a lot of artwork ideas in a fraction of a normal creative engagement. For free.

Do illustrators need to put down their pencils and cancel their Adobe subscriptions? Do we need to picket organisations that outsource their creative work to big tech companies? Well, sort of. A few publications have already been publicly shamed for saving on art budgets by passing over humans in favour of AI. That some of these choices have been made by journalists and editors – one step away from facing similar AI challenges themselves – is worth noting. What’s that quote? “First, they came for the Socialists, and I didn’t speak up because I wasn’t a Socialist…”

Thanks to the AI revolution, stock photography (which is already flooded with content and creatives and not enough cash) could find itself totally wiped out. Most of the marketing examples you see around these art generators are great executions of simple ideas: “a blue apple”, for example, or a “storm in a tea cup”. I would be surprised if a big stock imagery marketplace doesn’t license from, partner with, or even buy an AI art generator and use it to slowly replace their own stable of human photographers. Just like Uber’s very obvious intent to inevitably replace their human drivers once self-driving cars are viable.

Then there’s Midjourney, an alternative to Dall-E 2 which would be scary if I were still a fantasy/film concept artist.

Creative agencies and small start-ups will already be integrating this stuff into their early explorative creative work, in the same way that mood boards are currently used.

Still, right now, I’d say using AI for the final work is a bit like working with an enfant terrible. Great if you’re just following the bliss and are relaxed about what you end up with. For those who need to design by committee, or at least have multiple people’s requests embedded into the creative work, a human is still the answer. But this tech innovation has all happened incredibly fast. So ask me again in a month.

As in a lot of industries, the tech companies in this space aren’t going to slow their roll, and the smaller companies who use creative staff and freelancers will be considering the speed and cost efficiencies. The only thing that will have any real influence on the continued existence of creative professionals is how we as a society – and as individuals – choose to value human creativity. Go hug an illustrator, today.