In the second episode of Actually Interesting, The Spinoff’s new monthly podcast exploring the effect AI has on our lives, Russell Brown asks how aware machines really are.

Here’s a thing to understand: artificial intelligence isn’t magic and machines can’t really think or reason – at least not in any world you or I might actually live in. But machine learning can make computers spectacularly good at specific tasks, in ways that might make the world we do live in a better place.

“We’ll keep calling it AI, but what it really is, is pattern recognition,” says Kane O’Donnell, head data scientist at Hamilton-based Aware Group.

Having machines look at pictures and detect patterns is the basis of one of Aware’s primary services, which is counting things: cars on the road, people in crowds, widgets on a production line and, in a recent trial, students in a lecture theatre.

The trial was an extension of the company’s work with tertiary institutions, which needed information on how their facilities were being utilised. Doing a count in a 500-seat theatre isn’t a good use of a lecturer’s time, so typically a human would come in once a semester, count heads and leave. But the theatres already had cameras installed. Could they be used?

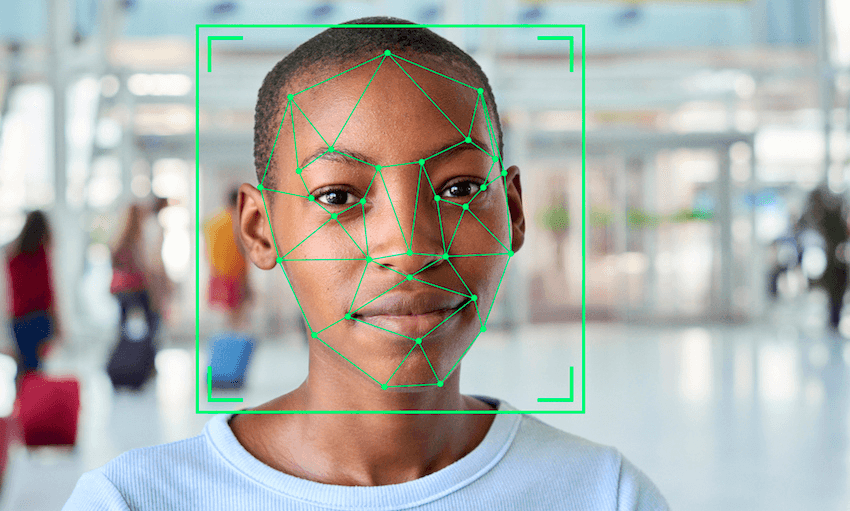

They could: so long as the computer learned the difference between a person and, say, a backpack – which is principally a matter of showing the machine a lot of pictures of humans. This year’s trial went further: it used facial recognition to not only tell how many students were in the theatre, but which students – a virtual roll-call.

You possibly winced when you read that, which is a natural reaction.

“I’m a self-confessed tinfoil hat, I’m pretty careful about privacy,” says O’Donnell, whose educational background is in theoretical physics.

“I wouldn’t want to be on a camera until I was pretty confident that I knew where that data was going and how it was being used. That’s a trust thing – which I think is the biggest thing at the moment around AI. It’s similar with driverless cars – how do I trust that my data is only going to be used for that specific purpose and not to send me targeted ads, for example?”

Behind every machine is a human using the information it collects for a reason. And this is where the problems can arise.

“It’s not AI that’s the problem, it’s that someone has made a choice that they are going to capture imagery from people and do facial recognition – and they might do nefarious things with it. It’s the people who make those decisions, not the specific method that they choose to do it.”

(If that sounds far-fetched as a thought experiment, revisit David Farrier’s reporting for The Spinoff about Albi Whale, whose allegedly groundbreaking AI system was literally a human typing in the background.)

Having a human do the job isn’t necessarily better for your privacy. In Britain, police employ “super-recognisers”, people with particularly acute recognition skills, to spot faces in crowds. That can go wrong – what if a super-recogniser spots a person they know in real life, a friend, an enemy? The AI might turn out to be the more discreet, unbiased observer.

Which isn’t to say that O’Donnell and his team don’t spend a lot of time thinking about what could possibly go wrong with the AI itself.

“There are some key features around AI which make things really challenging. It’s just pattern recognition and it’s very hard to explain why it made a decision. It’s kind of like a black box. If I train something to recognise whether it’s a sheep or a bird it’s seeing – tell me how you would decide whether this was a sheep or a bird?” O’Donnell asks.

Ah, how many legs it’s got…

“Okay, that’s great, that’s a reasonable interpretation. That’s what we would reasonably expect. However – and there are some really good and often funny cases of this – that can be totally incorrect. What the model is actually doing is saying ‘whenever you gave me a picture of a sheep there was a green background and of a bird there was a blue background, so actually I can be 100% right by just saying, if there is more green than blue in the picture, it’s a sheep and if there’s more blue it’s a bird’.”
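
The shortcut O’Donnell describes can be sketched in a few lines of Python. This is a toy illustration only, not Aware Group’s code or a real trained model: the function name, the pixel format (a list of red, green and blue values) and the colour numbers are all made up for the example.

```python
# Hypothetical illustration: an "image" is a list of (red, green, blue) pixels.
def shortcut_classifier(pixels):
    """Label an image by comparing total green against total blue.

    It never looks at the animal at all, only at the dominant colour.
    """
    green = sum(g for _, g, _ in pixels)
    blue = sum(b for _, _, b in pixels)
    return "sheep" if green > blue else "bird"


# Images like the ones the model was shown: a white sheep on grass,
# a brown bird against the sky (20 animal pixels, 80 background pixels).
sheep_on_grass = [(240, 240, 240)] * 20 + [(30, 180, 40)] * 80
bird_in_sky = [(90, 60, 40)] * 20 + [(60, 120, 220)] * 80

# An image the shortcut was never tested on: the same bird, standing on grass.
bird_on_grass = [(90, 60, 40)] * 20 + [(30, 180, 40)] * 80

print(shortcut_classifier(sheep_on_grass))
print(shortcut_classifier(bird_in_sky))
print(shortcut_classifier(bird_on_grass))
```

The rule gets both familiar pictures right and still calls the bird on grass a sheep, because the background, not the animal, drives the answer.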

And this is where problems arise. That interpretability becomes a real challenge, because if you can’t say why it’s making a decision, then you open yourself up to all the considerations around bias. And the other aspect of machine learning is that you can’t guarantee the right result.

If, for example, a driverless car sees something it hasn’t seen before – say, someone walking across the road on their hands – it doesn’t necessarily reason that it’s an inverted person.

“I don’t want to imply that things are all terrible and your Tesla’s going to drive off the road, because they’ve driven billions of miles and they’ve trained their models and it’s performed just right in all those miles. So if you’re driving a particular mile, then maybe there’s a one in a billion chance that it hasn’t been something it’s seen before and things might go poorly. But actually if you’re like the majority of people, then it’s probably going to go fine and it’s going to do a really useful thing.”

And there are useful things machines can learn to do. If you are looking at a particular skill or pattern, machine learning can get to the point where it is better than humans.

“Medical is a really big one. In medical imaging, a machine learning system can be more accurate than some doctors in detecting things like cancer. There’s all the genomics work that’s going on.

“But in all those cases, it’s like this is really useful – what’s the consequence if I’m wrong? With medical especially, you’re not going to trust any model without getting a person to review it.

“And that’s generally what we recommend. If it is something really sensitive, you’re recommending whether that person gets chemo or not, that should not be something you trust your model with. Almost at any point, even if you’ve had 10 years of success.”

So, to return to our original example, the consequences of getting a head-count wrong by one, two or 20 are comparatively benign. But what if you used your AI system to ask whether students are not only present but paying attention?

“That’s totally feasible. You could use that for good purposes and say, whether they’re engaged is a predictor of how likely they are to succeed. The student support team could jump in early on and help them before they fail the exam. At the moment, they only know someone’s having trouble when they fail.

“Or it could be used in a really bad way – for punitive measures.”

But even then, you have to be really careful when asking what these results actually mean.

“Someone might be unengaged because they’ve given up caring about this course. Or they might be having trouble at home and their mind is on different things. Or maybe – and this is where it gets sensitive – the particular lecturer is just a very unengaging person.”

This content was created in paid partnership with Microsoft.