When it comes to tackling online extremism, part of the answer rests with us – those who use the internet every day. New York Times tech columnist Kevin Roose talks about his new podcast Rabbit Hole.

The Christchurch terror attack shocked New Zealand, and the world. But there are many things about the way the attacker brought some of the worst parts of internet culture to life that have become all too familiar.

The filming and streaming of the attack, and the shooter’s online manifesto, showed obvious links to online radicalisation, and what can happen when that radicalisation manifests as violent terrorism. Many of the same characteristics were seen in a spate of attacks in 2018 and 2019: the Tree of Life Synagogue shooting in Pittsburgh, the El Paso Walmart attack, and the Poway Synagogue shooting in California.

Rightly, this cluster of attacks increased scrutiny of the part played by online platforms. And in the immediate wake of the March 15 attack in Christchurch, the prime minister, Jacinda Ardern, started to look for ways to combat these issues.

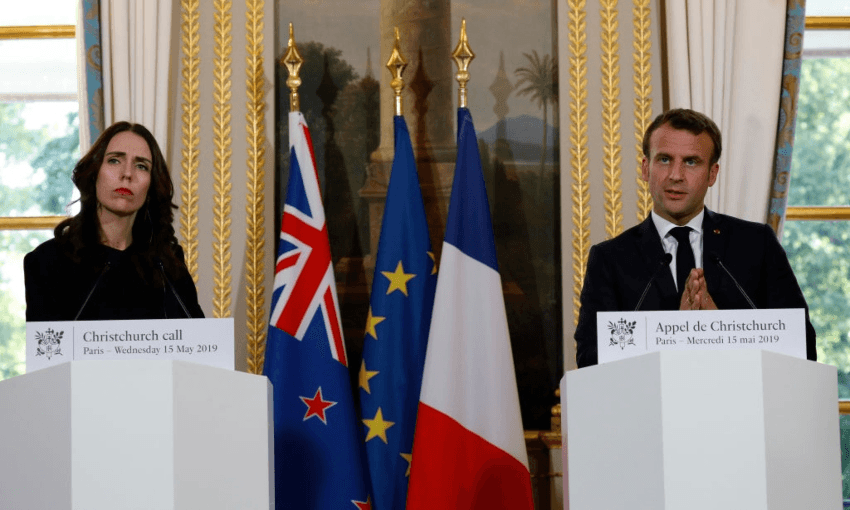

Friday marks one year since Ardern and French president Emmanuel Macron launched the Christchurch Call at a summit in Paris. More than 50 countries and supranational organisations, along with eight tech companies, have now committed to eliminating terrorist and violent extremist content online. But delivering on these types of agreements is slow going, and changing regulation is complicated. Add to that the challenge of working with opaque, and often difficult, tech giants.

Despite potential stumbling blocks, there has been progress on this front: the Global Internet Forum to Counter Terrorism (GIFCT) has been overhauled; civil society, countries and private companies have come together to form expert working groups focusing on specific online issues; and the prime minister has appointed Paul Ash as her special representative for cyber and digital – essentially he’s the country’s first-ever tech ambassador.

But governments, multi-stakeholder agreements and regulation can only do so much. New York Times tech columnist Kevin Roose says accords like the Christchurch Call lay the groundwork for better online conversation. Keeping the pressure on social media companies, so they know it’s up to them to stop their algorithms from steering people in dangerous directions, also plays a part. But fixing this problem is largely up to us – people who use the internet every day.

Historically, people have changed their behaviour when they become conscious of the system they are operating in, Roose says.

“If you’re aware you are entering a system that is designed in some way to exploit you, you behave differently than if you think this is a kind of magical wonderland, where all the machines work perfectly, and all the content is great, and everything is totally personalised to your interests.”

Roose says he wasn’t surprised when he saw this online radicalisation and exposure to violent extremist content manifest in the recent cluster of killings.

Since 2015, he’s been watching extremist online communities become increasingly violent, and examining the links between online extremism and offline radicalisation and violence. Over the years, Roose worried he was getting side-tracked by fringe online culture, and learning way too much about weird and toxic parts of the internet, as a curiosity. All of a sudden that information became valuable.

“It turns out learning about something like how 8Chan operates is pretty key to understanding these violent events that affect people all over the world.”

Following these killings, he felt a duty to use his knowledge to help people better understand why and how this was happening. So he and New York Times producer Andy Mills created the podcast Rabbit Hole.

“It felt like in order to understand why someone might post a video of themselves shooting people in a synagogue and stream it online, and post a manifesto, you kind of had to understand this whole universe, internet culture, and the ways in which it’s changed.”

It’s a big ask of a podcast, but Roose hopes Rabbit Hole will help people overcome what he refers to as a “language barrier”, in a way that resonates with everyone – whether they spend 12 hours a day on Reddit, YouTube and 8Chan, or barely engage with social media.

“Part of the job of being a journalist is translating between different groups of people who have not understood each other all that well.”

While everyone is affected by the internet, most people won’t end up committing acts of terrorism as a result. So Rabbit Hole also delves into the subtle ways online systems affect and change people’s behaviour and preferences – something that’s really hit home with listeners.

A lot of people have noticed their own beliefs and behaviours changing as a result of what they encounter on the internet, Roose says, adding that this is more pronounced right now, with everyone stuck at home and spending more time online.

If there’s one thing he hopes listeners take from the podcast, it’s the ability to think more deeply about the role algorithms play in shaping their everyday lives – think Spotify’s Discover, Netflix’s recommendations or TikTok’s “For You” page.

“What often happens is we mistake these as algorithms that are designed to read our minds and figure out what we already like, and we miss the fact that many of them, they’re actually changing our minds.”

As a result, most people’s preferences are a hybrid of what they actually like and the things a machine or an algorithm has told them to like.

Most people know how humans have shaped the internet, but what’s still something of a mystery is how this tool is shaping us. If people can become more conscious of how the internet works, and its impact on human behaviour, they can make more educated choices. With this knowledge could come the power to reshape this tool into a force for more good and less evil. And it seems there is already movement in the right direction.

Since the March 15 attack in Christchurch and the launch of the Christchurch Call, and amid growing awareness of online extremism, big tech platforms like YouTube, Facebook and Twitter have changed their tune. The PM’s special representative for cyber and digital, Paul Ash, says the Christchurch attack presented a moment for government and industry to work together.

“The tech industry didn’t want these problems on its platforms any more than we did.”

And while there is a lot more work to be done, those working in this area say the tech giants are aware of the problems they’ve created and are doing something to address them. Roose says these changes have given him optimism, albeit the tempered kind.

Unfortunately, there’s still a lot of awful content online, and in order for Roose and others like him to keep breaking down the language barrier, they have to spend a lot of time in some pretty awful corners of the internet.

Like others who’ve spent many hours online, Roose has become largely desensitised: “There’s not that much that shocks me any more.”

But he doesn’t want to be put on a pedestal for doing his job.

“I have colleagues who put themselves in harm’s way, in much greater ways than I do.

“I sit at a computer and look at memes, and I have colleagues who go to war zones and report on pandemics from hospitals… so it’s hard for me to feel too sorry for myself.”

As someone whose job is to be up to date on the latest bad meme, quitting Twitter isn’t really an option for Roose. But his self-care does involve balancing the time he spends online for work, and sometimes just logging off.