Two years after David Farrier helped to unearth the exploitation of children on YouTube, the sexualisation of children on the platform continues – and NZ companies are inadvertently appearing alongside it. Oskar Howell reports.

New Zealand businesses are advertising their goods and services alongside softcore pornographic videos on YouTube; they just don’t know they’re doing it.

YouTube’s video recommendation algorithm has always been a subject for debate. No one really knows how it works, meaning YouTube often operates as a lone wolf in the online video world.

Writing for The Spinoff, David Farrier was one of the first to bring the issue of child pornography on YouTube to light in 2017, when he wrote about the online fetish industry that exploited young children for the enjoyment of online predators.

Now, YouTuber MattsWhatItIs has opened up a whole new underworld: softcore child pornography that is being actively pushed towards viewers by the YouTube algorithm.

In a livestream titled “Youtube is Facilitating the Sexual Exploitation of Children, and it’s Being Monetized” he breaks down the way YouTube’s algorithm rabbit hole works, and where things start to go very bad, very quickly. He claims to have detected “a wormhole of a softcore pedophile ring”.

The rabbit hole begins with a simple search. MattsWhatItIs started with “Bikini Haul”, but anything of that sort appears to activate the algorithm. While it’s a certain kind of person who goes looking for these types of videos, doing so isn’t unlawful. The girls are seemingly over 18, and assuming YouTube’s guidelines are being adhered to, are doing nothing illegal for the millions of views they’re amassing.

But within four clicks of the starting point, you find the first one that clearly crosses the line.

These videos mostly feature young girls, often younger than 10 years old, and unaccompanied. They’re “vlogging”, as the kids call it.

Within a few more clicks, you find yourself in a deeply disturbing world of prepubescent girls and boys. Gymnastics, truth or dare challenges, bath time vlogs, night routines, they’re all there.

YouTube’s “wormhole” tunnels on. Every recommended video features another child. Unless you navigate back to the home page, every new video on autoplay or in the sidebar comes up with the same stuff. This is evidence of a broken algorithm, flawed despite years of tinkering.

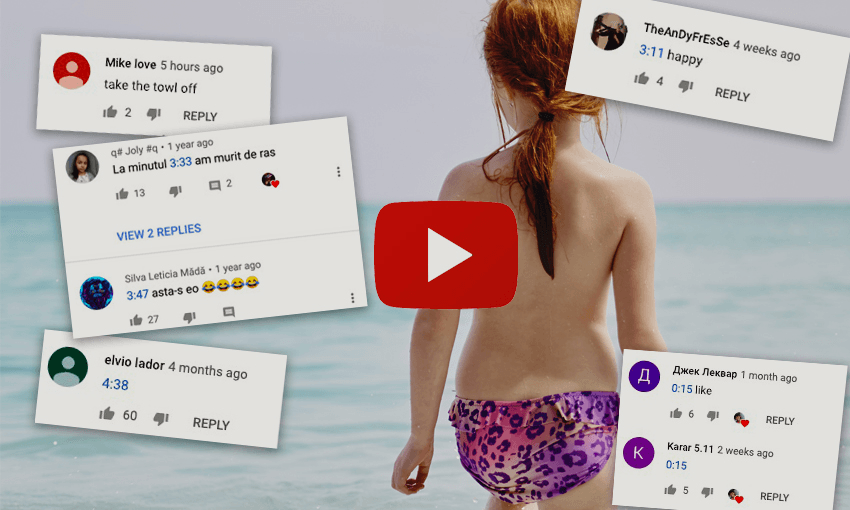

The comments on the videos are telling of the nature of some of the viewers. They’re filled with little blue links: timestamps marking moments in the video where the girls or boys are in suggestive or sexually implicit positions. The comment sections are also filled with lewd remarks.

It gets worse. As MattsWhatItIs mentions in his livestream, the comments sections also include external links to actual child porn. You know, the kind of stuff that carries a ten-year prison sentence in New Zealand. Google Drive links, Dropboxes, other YouTube videos: the whole cheeseboard of deplorable content is available within a few clicks.

YouTube, which is owned by the Silicon Valley giant Google, maintains a strict policy against child pornography. The company made headlines when it updated its minor protection policy, by flagging videos with children and disabling comments. “We strictly prohibit sexually explicit content featuring minors and content that sexually exploits minors,” it said at the time. “Uploading, commenting, or engaging in activity that sexualizes minors may result in the content being removed and the account may be terminated.”

The MattsWhatItIs livestream casts grave doubt on the efficacy of that policy, however: so many videos are still getting through, ultimately offering a gateway to the viewing and distribution of child pornography.

The way these videos are monetised is via advertising. And that includes advertisements served to New Zealand audiences for local businesses.

When I went “down the rabbit hole”, within a few clicks advertisements for ZM, Les Mills and NZME’s OneRoof platform popped up. The accounts running the videos are profiting off the clicks.

There’s no way that companies whose advertisements appear on such content could be aware of what is going on, let alone endorse it. Corporate advertising relies on YouTube as a third party to ensure the adverts are placed over appropriate videos; the responsibility for the safety of the video participants falls squarely on YouTube.

Unless someone notifies the companies of the nature of the videos and the ecosystem in which their advertisements are placed, they are unlikely to find out just where their advertising budget is going.

The Spinoff this afternoon contacted both Les Mills and NZME, parent company of ZM and OneRoof. Both companies said they had been completely unaware of the issue. An NZME representative said they would be “looking into the situation immediately”. A Les Mills spokesperson said that in light of the news they had paused their advertising on YouTube and “will not resume until we are confident that we will not be associated with such material”.

This latest news is reminiscent of the advertising scandal in 2017 when YouTube was found to be putting corporate advertising over exploitative videos featuring children. Several huge brands, including Hewlett-Packard and adidas, pulled all their ads from the platform in response. At the time, YouTube said this:

“There shouldn’t be any ads running on this content and we are working urgently to fix this. Over the past year, we have been working to ensure that YouTube is a safe place for everyone and while we have made significant changes in product, policy, enforcement and controls, we will continue to improve.”

And yet, two years later, the problem apparently remains.

The onus is ultimately on YouTube to ensure that the participants of videos uploaded to its site are safe, and that the conduct around the videos is in keeping with its policy. This latest, disturbing episode is another example of the dangers presented by digital companies that pay so little heed to national borders.

In a statement provided to Newsweek about the MattsWhatItIs revelations, a YouTube spokesperson said: “Any content – including comments – that endangers minors is abhorrent and we have clear policies prohibiting this on YouTube. We enforce these policies aggressively, reporting it to the relevant authorities, removing it from our platform and terminating accounts. We continue to invest heavily in technology, teams and partnerships with charities to tackle this issue.”

For information on helping children stay safe online, visit netsafe.org.nz.