Critics have lambasted a new code of practice for online safety as “window dressing”. Issued today with the major US social media players on board, the code has been hailed by Netsafe as “a joint agreement that sets a benchmark for online safety in the Asia-Pacific region”, but New Zealand-based internet advocacy groups argue that it looks like an effort to “subvert” Netsafe, the organisation that tackles online harms and operates as the approved agency under the Harmful Digital Communications Act.

The launch signatories are Meta (which owns Facebook and Instagram), Google and its subsidiary YouTube, TikTok, Twitter and Amazon, which owns streaming service Twitch. The self-regulatory code, which obliges the companies “to actively reduce harmful content on their relevant digital platforms and services in New Zealand”, will be administered by NZTech, a non-profit industry body that represents about 1,000 technology companies.

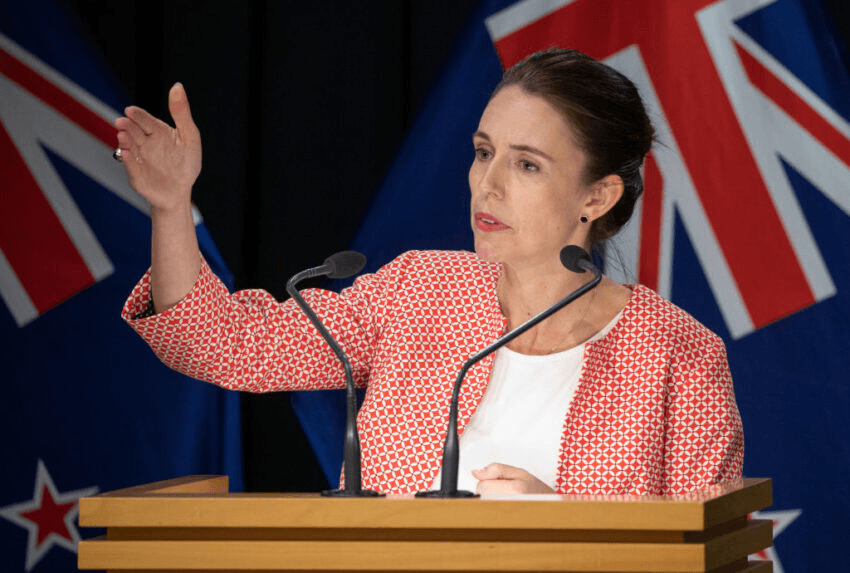

The draft code, and the consultation process through which it was developed, were criticised last year by InternetNZ and advocacy groups Tohatoha NZ and the Inclusive Aotearoa Collective Tāhono. In an interview with The Spinoff earlier this month, the new Netsafe CEO Brent Carey acknowledged the need to “involve more civil society voices”, saying: “When it was first developed we should have involved more multi-stakeholder voices, and that’s what I’ve been looking at already in the role – how to get a broader perspective.”

In a statement on behalf of the three groups today, Mandy Henk, CEO of Tohatoha NZ, said their concerns had been far from met. “This code looks to us like a Meta-led effort to subvert a New Zealand institution so that they can claim legitimacy without having done the work to earn it,” she said. “In our view, this is a weak attempt to preempt regulation – in New Zealand and overseas – by promoting an industry-led model that avoids the real change and real accountability needed to protect communities, individuals and the health of our democracy, which is being subjected to enormous amounts of disinformation designed to increase hate and destroy social cohesion.”

She added: “This Code talks a lot about transparency, but transparency without accountability is just window dressing. In our view, nothing in this code enhances the accountability of the platforms or ensures that those who are harmed by their business models are made whole again or protected from future harms.” Henk questioned whether NZTech was equipped to take on the role of overseeing the code, saying: “They have no human rights expertise or experience leading community engagements. While we have no qualms with what they do, they are not impartial or focused on the needs of those who are harmed by these platforms.”

Government work on a Content Regulatory Review, overseen by Internal Affairs, continues.